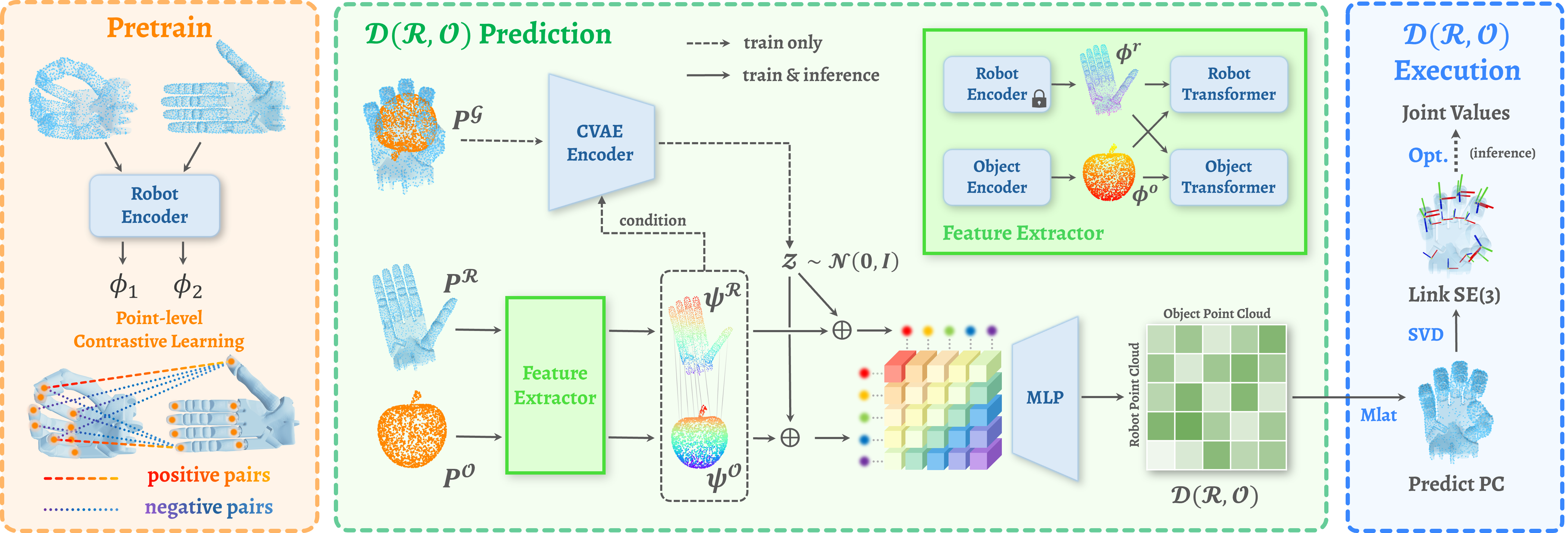

Pipeline Overview

Dexterous grasping is a fundamental yet challenging skill in robotic manipulation, requiring precise interaction between robotic hands and objects. In this paper, we present $\mathcal{D}(\mathcal{R},\mathcal{O})$ Grasp, a novel framework that models the interaction between the robotic hand in its grasping pose and the object, enabling broad generalization across various robot hands and object geometries. Our model takes the robot hand’s description and object point cloud as inputs and efficiently predicts kinematically valid and stable grasps, demonstrating strong adaptability to diverse robot embodiments and object geometries. Extensive experiments conducted in both simulated and real-world environments validate the effectiveness of our approach, with significant improvements in success rate, grasp diversity, and inference speed across multiple robotic hands. Our method achieves an average success rate of 87.53% in simulation, with inference taking less than one second, tested on three different dexterous robotic hands, and also performs successfully in real-world experiments using LeapHand. $\mathcal{D}(\mathcal{R},\mathcal{O})$ Grasp provides a robust solution for dexterous grasping in complex and varied environments.
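The abstract does not spell out what the $\mathcal{D}(\mathcal{R},\mathcal{O})$ representation contains. As a minimal sketch, assume it is a matrix of Euclidean distances between points sampled on the robot hand in its grasping pose ($\mathcal{R}$) and points of the object point cloud ($\mathcal{O}$). The function name `pairwise_distances` and the toy coordinates below are hypothetical and chosen only for illustration; this is not the authors' implementation.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def pairwise_distances(
    robot_points: Sequence[Point], object_points: Sequence[Point]
) -> List[List[float]]:
    """Return D where D[i][j] is the Euclidean distance between
    robot point i and object point j.

    Assumption: this mirrors one plausible reading of D(R, O);
    the source text does not define the representation.
    """
    return [[math.dist(r, o) for o in object_points] for r in robot_points]


# Hypothetical toy data: 2 points on the hand, 3 points on the object.
robot_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
object_points = [(0.0, 3.0, 4.0), (1.0, 0.0, 0.0), (0.0, 0.0, 2.0)]

D = pairwise_distances(robot_points, object_points)

for row in D:
    print([round(d, 3) for d in row])
```

Under this assumption the matrix has one row per hand point and one column per object point, so its shape depends only on how many points are sampled and not on the hand's joint structure. That property is one way a single representation could be shared across different hands.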

Panel labels: Barrett, Allegro, ShadowHand; Grasp, Observation

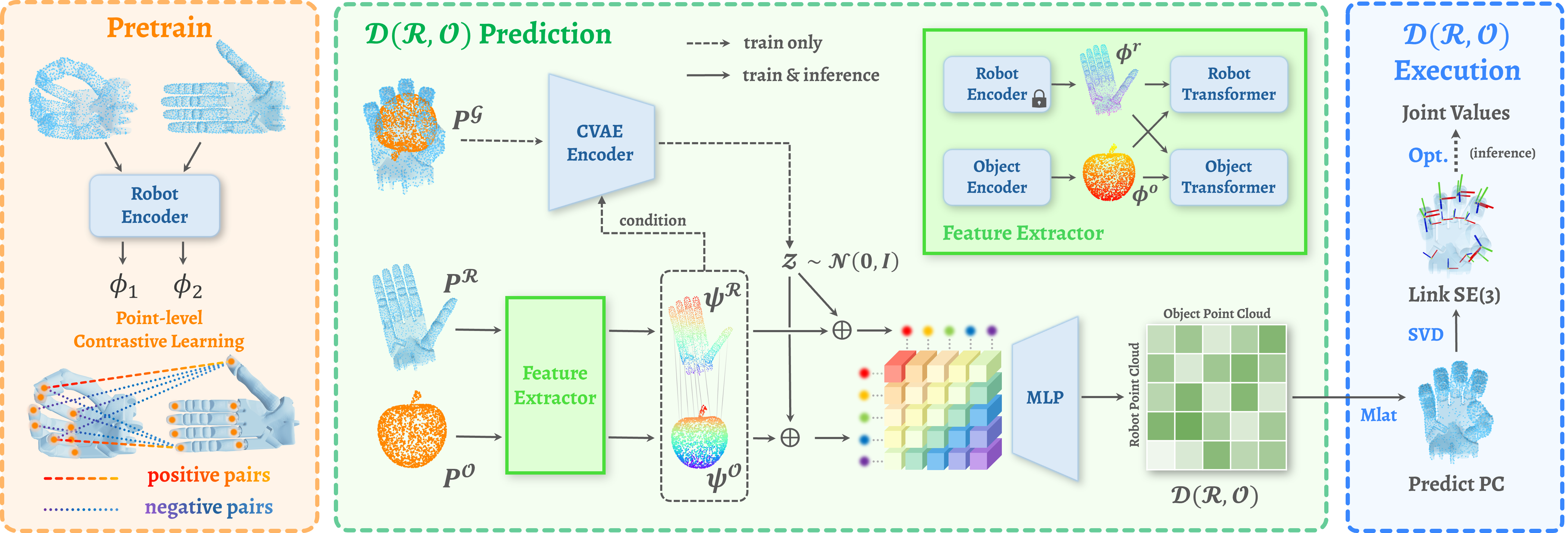

Apple

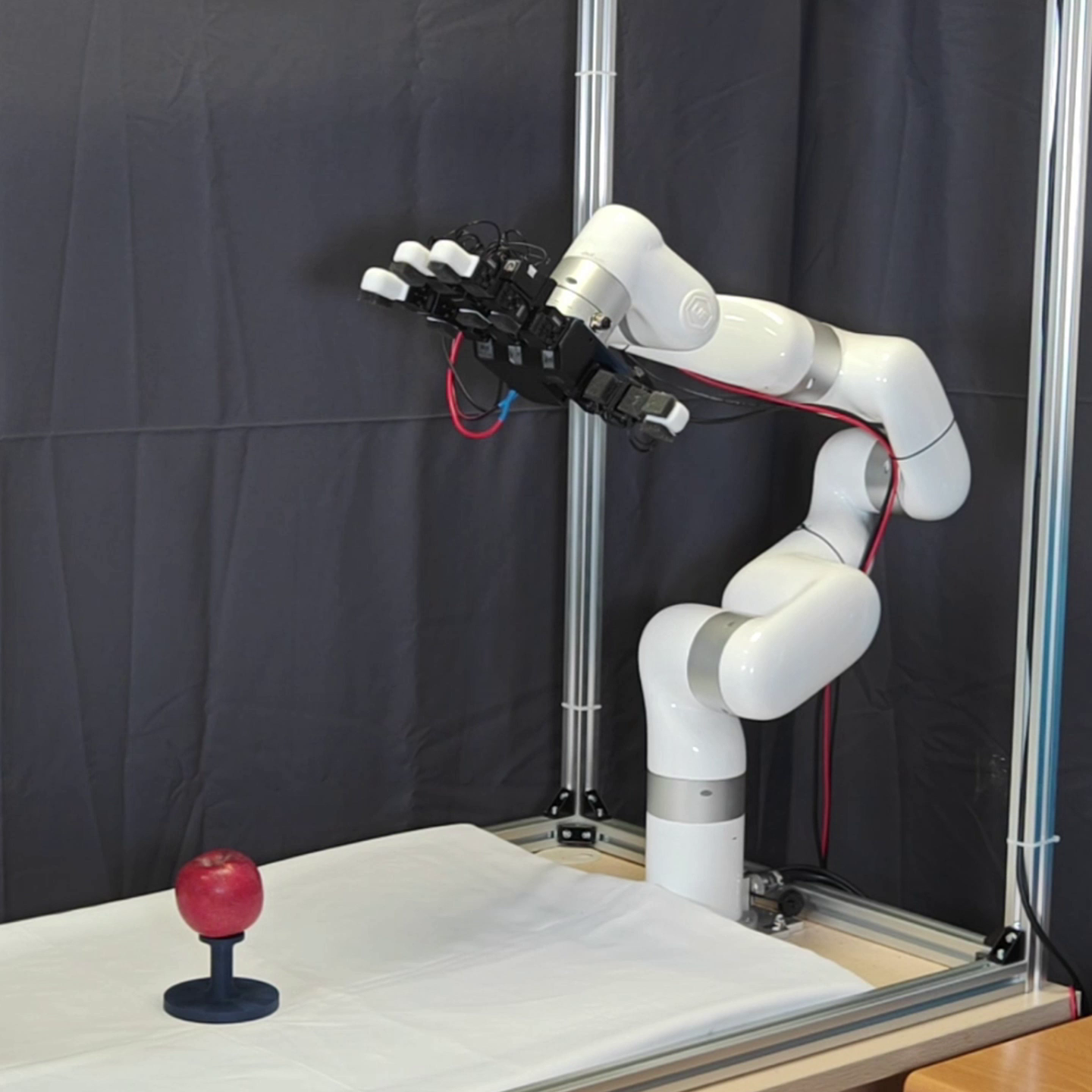

Bag

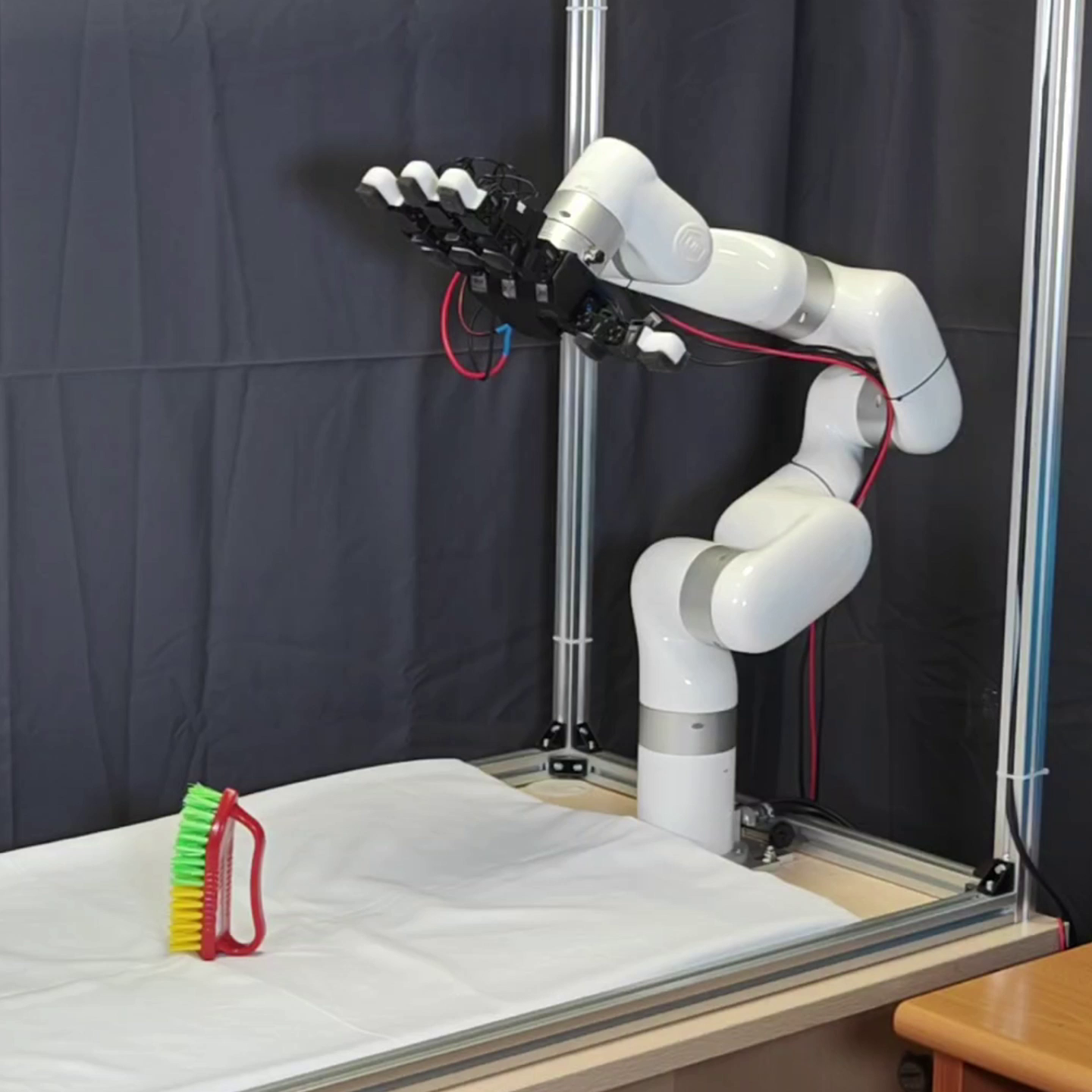

Brush

Cookie Box

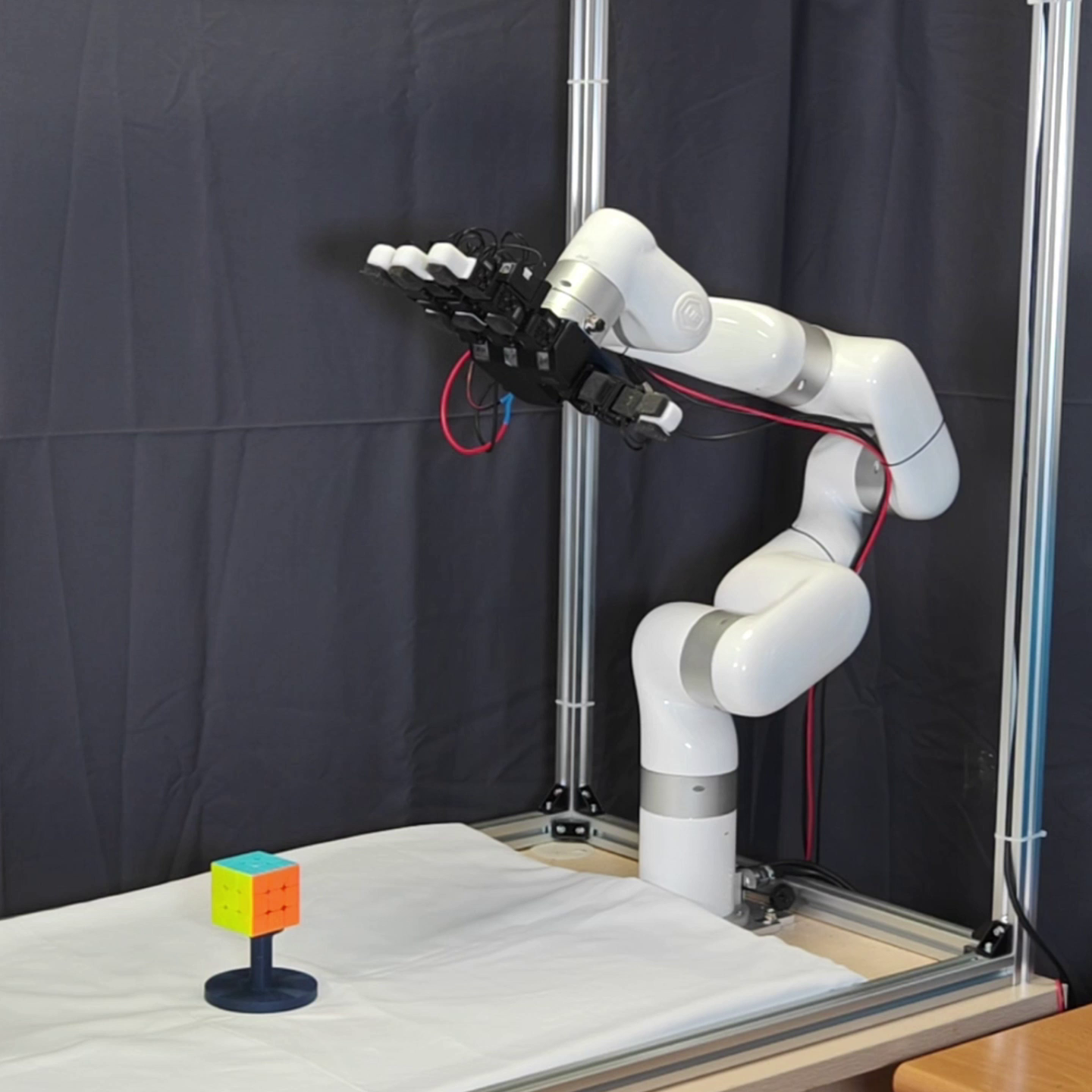

Cube

Cup

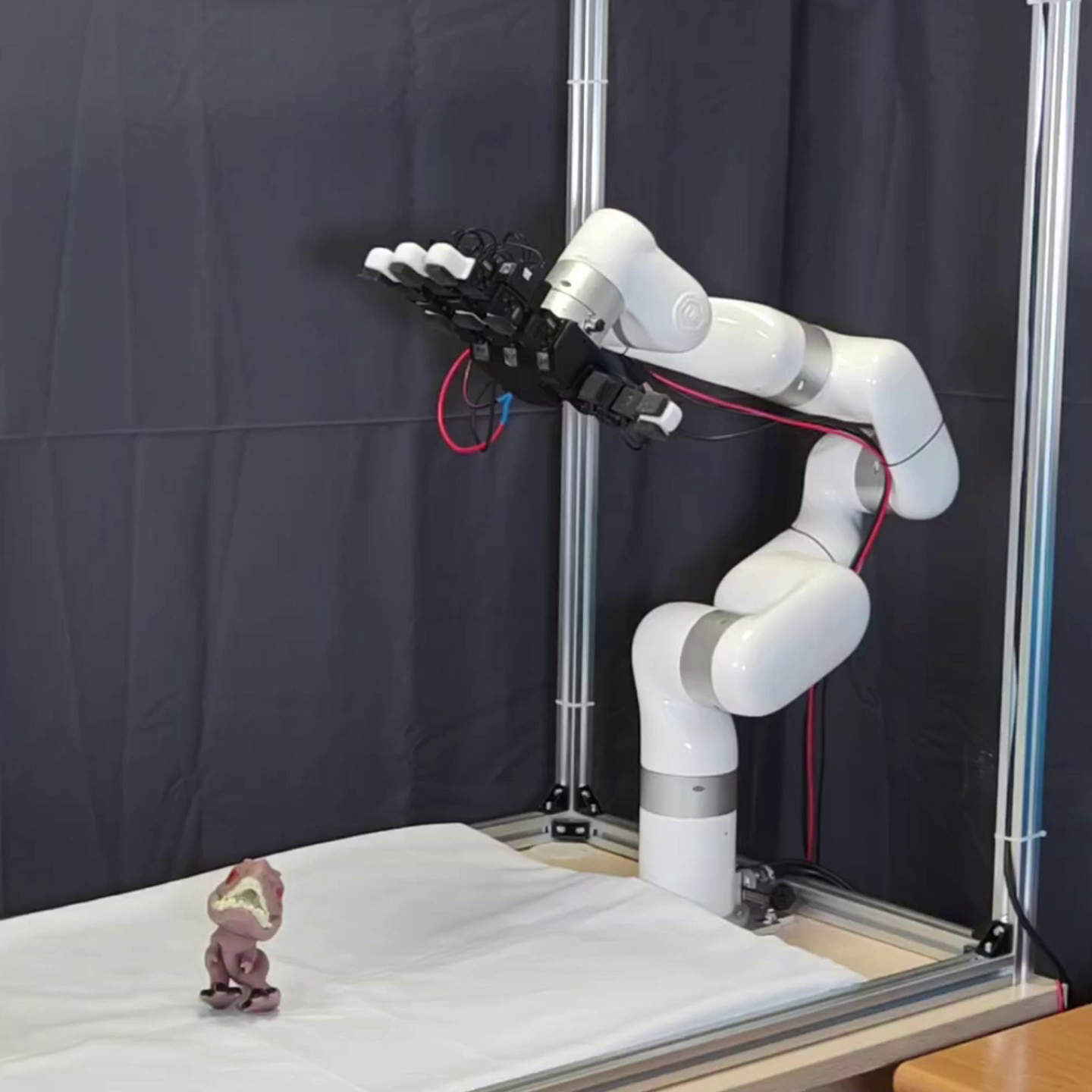

Dinosaur

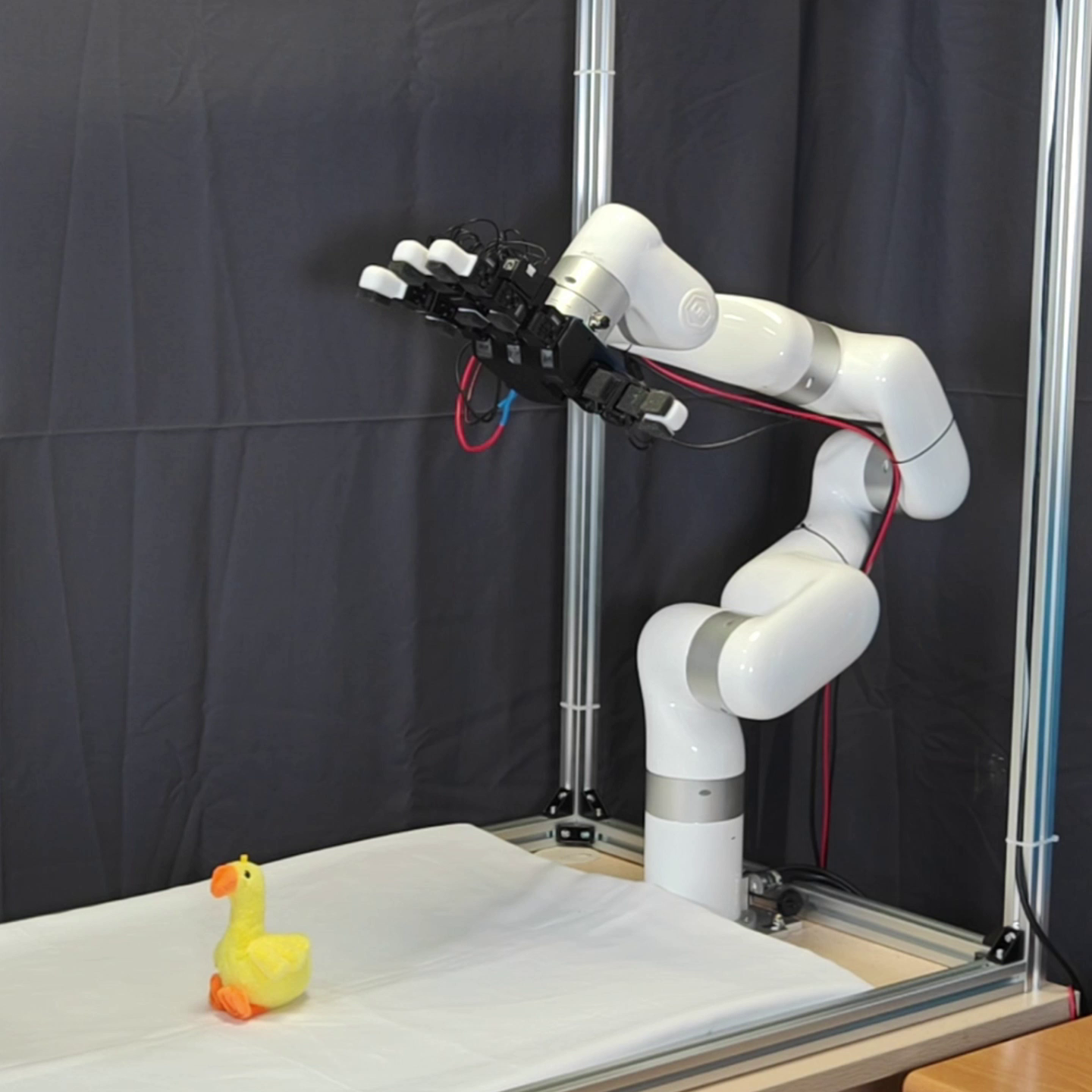

Duck

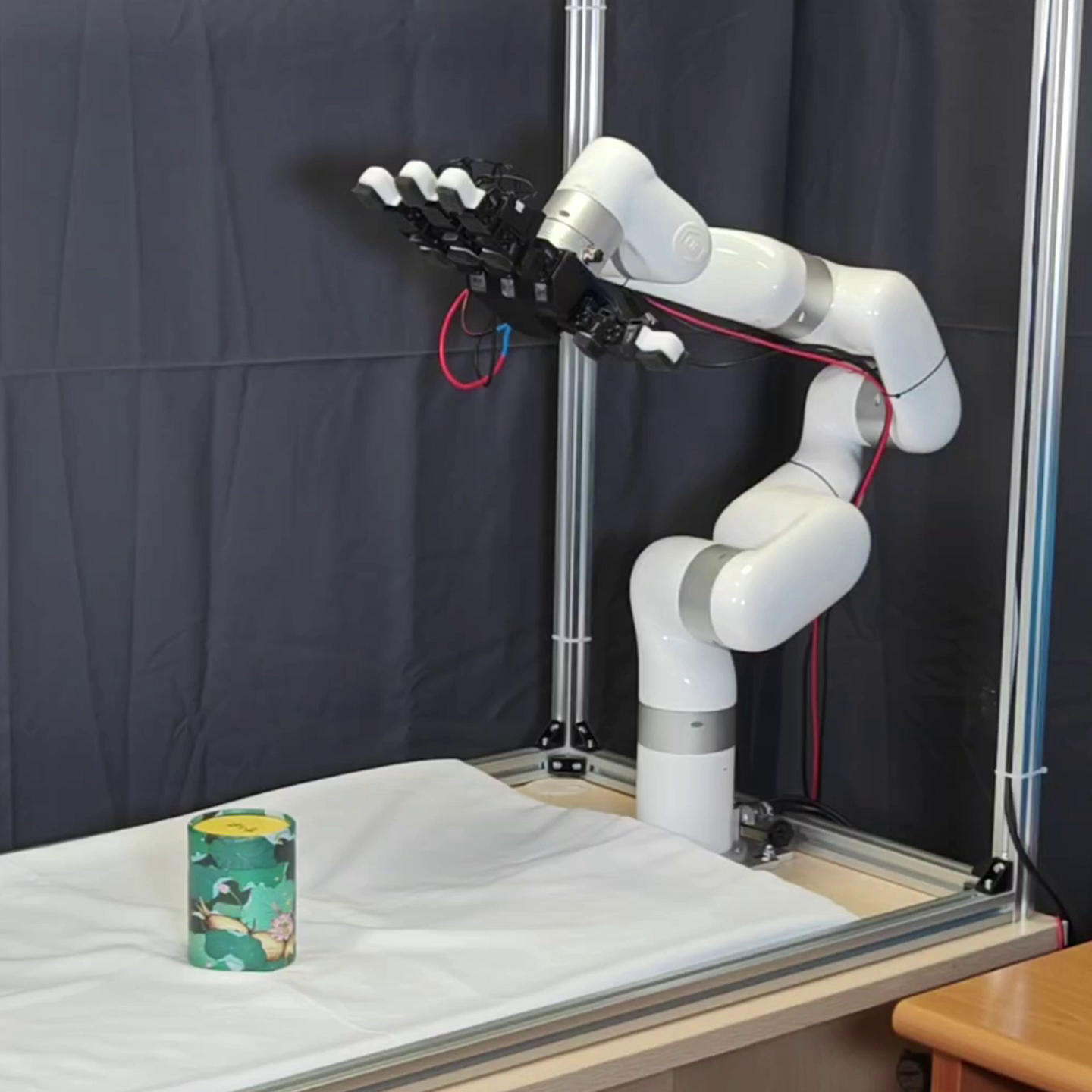

Tea Box

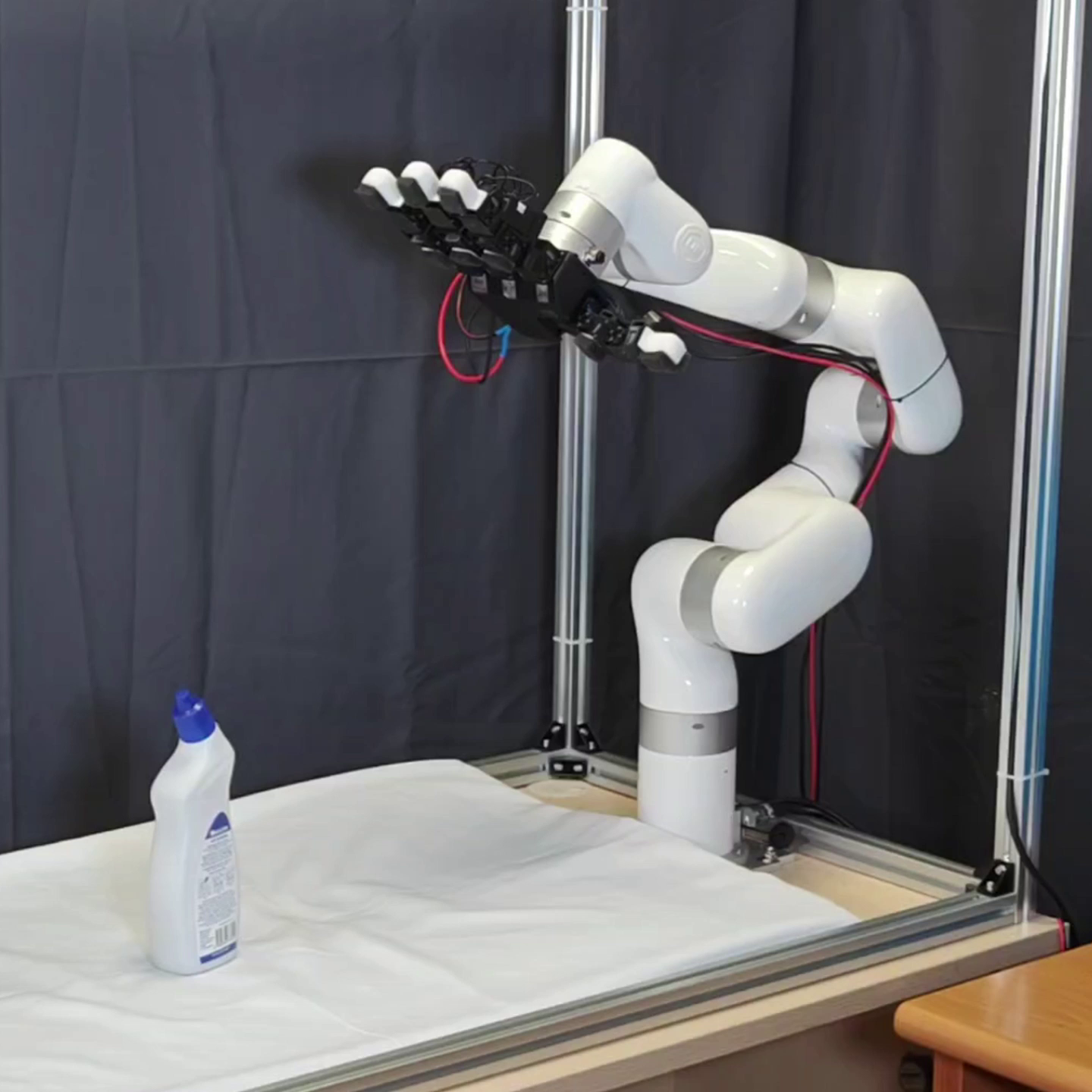

Toilet Cleaner

@INPROCEEDINGS{wei2024dro,
  author={Wei, Zhenyu and Xu, Zhixuan and Guo, Jingxiang and Hou, Yiwen and Gao, Chongkai and Cai, Zhehao and Luo, Jiayu and Shao, Lin},
  booktitle={2025 IEEE International Conference on Robotics and Automation (ICRA)},
  title={$\mathcal{D}(\mathcal{R}, \mathcal{O})$ Grasp: A Unified Representation of Robot and Object Interaction for Cross-Embodiment Dexterous Grasping},
  year={2025},
  volume={},
  number={},
  pages={4982-4988},
  keywords={Hands;Geometry;Point cloud compression;Adaptation models;Computational modeling;Grasping;Predictive models;Computational efficiency;Robots;Videos},
  doi={10.1109/ICRA55743.2025.11127754}
}